
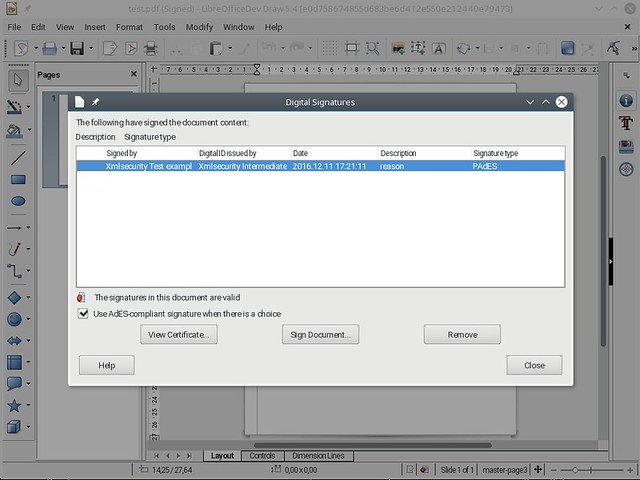

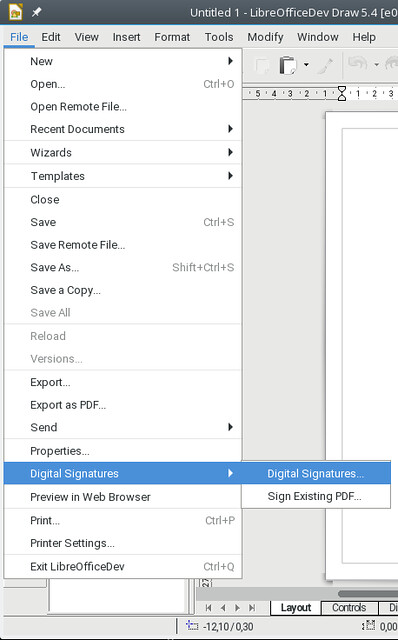

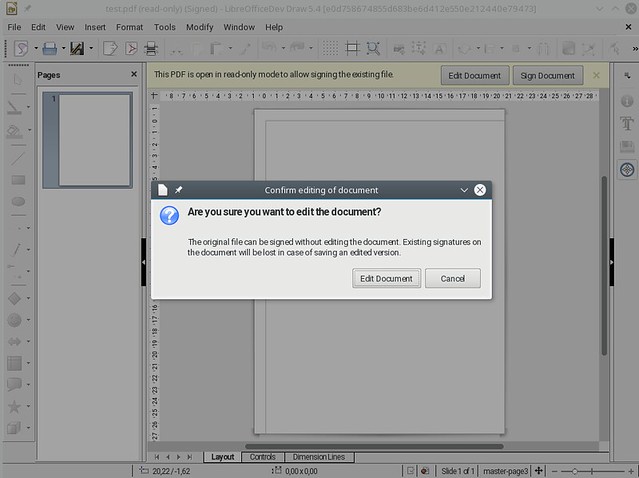

Last month a LibreOffice bug report was filed, as LibreOffice could not verify ODF signatures created with Hungarian citizen eID cards. After a bit of research it seemed that LibreOffice and NSS (which we use for crypto work on Linux/macOS) were not the problem; rather, xmlsec’s NSS backend did not recognize ECDSA keys (RSA and DSA keys work fine).

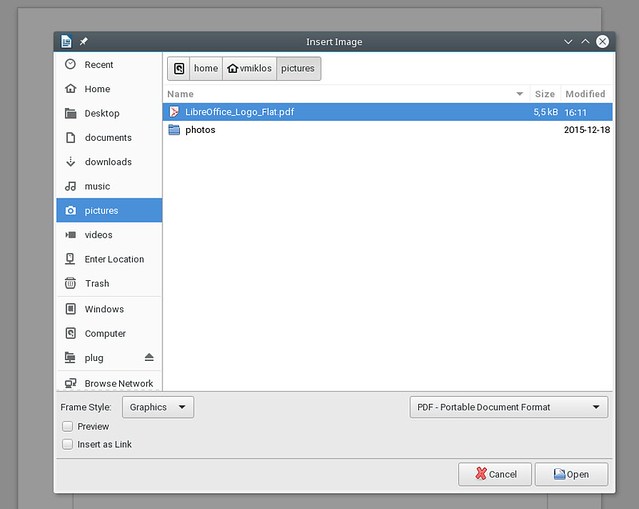

The xmlsec improvements happened in these pull requests:

- prepare the xmlsec ECDSA tests, so that they can test not only openssl, but NSS as well
- add initial ECDSA support (SHA256 hashing only)

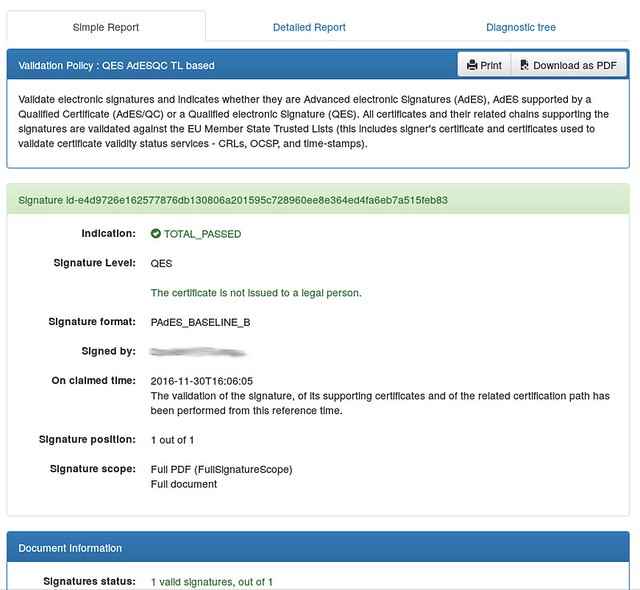

After this the xmlsec code looked good enough. I had to request an update of the bugdoc in the TDF bug twice, as the signature itself also looked incorrect initially:

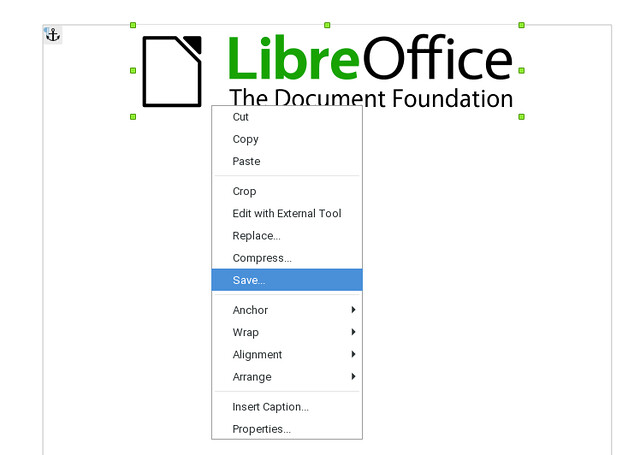

- an attribute type in the signature that had no official abbreviation was described as "UNDEF" instead of the dotted decimal form
- RFC 3279 specifies that an ECDSA signature value should in general be ASN.1-encoded, but RFC 4050, which is specific to XML digital signatures, says it should not be ASN.1-encoded. The bugdoc was initially ASN.1-encoded.
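To make the difference between the two encodings concrete, here is a minimal sketch in plain Python (not the xmlsec or NSS code). It converts between the ASN.1 DER form, a SEQUENCE of two INTEGERs `r` and `s`, and the plain `r || s` concatenation that ends up base64-encoded in the XML signature value. The function names and the fixed 32-byte width (which would fit a 256-bit curve) are assumptions made for illustration only.

```python
def _read_len(buf: bytes, pos: int) -> tuple[int, int]:
    """Read a DER length at pos; return (length, position after it)."""
    first = buf[pos]
    pos += 1
    if first < 0x80:  # short form: the byte is the length itself
        return first, pos
    n = first & 0x7F  # long form: the next n bytes hold the length
    return int.from_bytes(buf[pos:pos + n], "big"), pos + n


def _read_int(buf: bytes, pos: int) -> tuple[int, int]:
    """Read a DER INTEGER at pos; return (value, position after it)."""
    if buf[pos] != 0x02:
        raise ValueError("expected INTEGER tag")
    length, pos = _read_len(buf, pos + 1)
    return int.from_bytes(buf[pos:pos + length], "big"), pos + length


def _encode_int(value: int) -> bytes:
    """Encode a non-negative integer as a DER INTEGER."""
    # One extra bit of room yields a leading 0x00 when the high bit is set,
    # so the value is never misread as negative.
    body = value.to_bytes(value.bit_length() // 8 + 1, "big")
    return b"\x02" + bytes([len(body)]) + body  # assumes len(body) < 128


def der_to_raw(der: bytes, width: int = 32) -> bytes:
    """ASN.1 DER SEQUENCE { r, s } -> fixed-width r || s."""
    if der[0] != 0x30:
        raise ValueError("expected SEQUENCE tag")
    _, pos = _read_len(der, 1)
    r, pos = _read_int(der, pos)
    s, pos = _read_int(der, pos)
    return r.to_bytes(width, "big") + s.to_bytes(width, "big")


def raw_to_der(raw: bytes) -> bytes:
    """Fixed-width r || s -> ASN.1 DER SEQUENCE { r, s }."""
    if len(raw) % 2:
        raise ValueError("raw signature must have even length")
    half = len(raw) // 2
    body = (_encode_int(int.from_bytes(raw[:half], "big"))
            + _encode_int(int.from_bytes(raw[half:], "big")))
    if len(body) >= 0x80:
        raise ValueError("sketch only handles short-form lengths")
    return b"\x30" + bytes([len(body)]) + body
```

A verifier that expects one form and gets the other simply sees a signature of the wrong length or shape, which matches the symptom of the first bugdoc.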

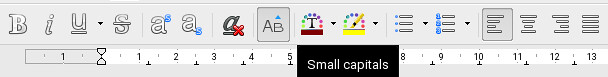

Finally, a warning still remains: while trying to parse the text of the `<X509IssuerName>` element, the dotted decimal form is still not parsed (see this NSS bugreport). The bug is confirmed on the mailing list, but no other progress has been made so far.

Oh, and of course: Windows is still untouched. A bigger problem remains there: we use CryptoAPI (not CNG), which does not support ECDSA at all. Hooray for open-source libs where you can add such support yourself. ;-)